Building a Terminal Chat CLI with Typer and OpenAI

Introduction

Typer makes it easy to create intuitive, type-safe commands, and integrating OpenAI adds the power of conversational AI. CLI tools fit naturally into developer workflows. Integrating OpenAI via Typer means users can leverage AI capabilities without leaving the terminal, boosting productivity and maintaining focus.

Prerequisites

- Python 3.11+ (the pyproject.toml used later sets requires-python to >=3.11)

- uv package manager (pip install uv)

- OpenAI account

Setting up your environment

- Create the project: uv init friday-cli

- Navigate to the project: cd friday-cli

- Install the libraries: uv add openai typer peewee

Folder Structure

We use the following structure. It matters because it affects the packaging step later:

friday-cli/
├── cli/
│   ├── __init__.py
│   ├── main.py            # Our CLI entry point
│   └── modules/
│       ├── __init__.py
│       ├── database.py
│       ├── assistant.py
│       └── tool_calls.py
└── pyproject.toml

Database module

This module is designed to store and manage configuration settings in a SQLite database using Peewee. It includes simple functions for setting and retrieving key-value pairs and initializing the database table.

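import os

from peewee import AutoField, CharField, Model, SqliteDatabase

# SQLite file, created in the current working directory
db = SqliteDatabase('database.db')


class OpenAIConfig(Model):
    # One row per configuration key
    id = AutoField(primary_key=True)
    key = CharField(max_length=255, unique=True)
    value = CharField(max_length=255)

    class Meta:
        database = db


def get_config(key: str):
    # Raises OpenAIConfig.DoesNotExist if the key has not been set yet
    return OpenAIConfig.get(OpenAIConfig.key == key).value


def set_config(key: str, value: str):
    # Update the row if the key already exists, otherwise create it
    config = OpenAIConfig.select().where(OpenAIConfig.key == key).first()
    if config:
        config.value = value
        config.save()
    else:
        OpenAIConfig.create(key=key, value=value)


def check_db_exists():
    return os.path.exists("database.db")


def init_db():
    # reuse_if_open avoids an error when the connection is already open
    db.connect(reuse_if_open=True)
    db.create_tables([OpenAIConfig], safe=True)
    db.close()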
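One thing to keep in mind: 'database.db' is a relative path, so the file is looked up in whatever directory the command is run from. Once the CLI is installed and called from different folders, that may not be the intended behaviour. The sketch below shows one possible way to compute a per-user location instead. The helper name default_db_path and the ~/.friday folder are assumptions made for illustration, not part of the original module.

```python
from pathlib import Path
from typing import Optional


def default_db_path(app_name: str = "friday", home: Optional[Path] = None) -> Path:
    """Return <home>/.<app_name>/database.db (hypothetical helper).

    `home` can be overridden, which keeps the function easy to test.
    """
    base = home if home is not None else Path.home()
    return base / f".{app_name}" / "database.db"


# Example with an explicit home directory
print(default_db_path("friday", Path("/home/alice")))
```

To use it, the parent folder would need to exist before connecting, for example by calling default_db_path().parent.mkdir(parents=True, exist_ok=True) and then passing str(default_db_path()) to SqliteDatabase.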

Build assistant model

For instructions on setting up the OpenAI API, please refer to the OpenAI documentation. Below are the steps for building the DevOps Assistant in assistant.py:

Create a Thread:

The create_thread function initializes a new thread within the OpenAI system. Threads act as containers for conversations and maintain context across messages.

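from openai import OpenAI

from cli.modules.database import get_config


def create_thread():
    # A thread stores the conversation history for the assistant
    client = OpenAI(api_key=get_config("api_key"))
    return client.beta.threads.create().id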

Create an Assistant

- Name and Instructions: The assistant is named DevOps Assistant and receives detailed instructions about its role and behaviour:

  - Respond concisely to “how to” questions.

  - Restrict its scope to DevOps-related topics.

  - The default is to use docker-compose.yml if a file path isn’t provided.

- Model: You can specify the OpenAI model, such as gpt-4 or gpt-4-turbo.

- Tools: The assistant is equipped with tools to execute specific actions, like managing Docker containers (remove_containers, start_containers, etc.). Each tool is defined with:

  - Name: What the tool is called.

  - Description: A brief explanation of what the tool does.

  - Parameters: The arguments the tool accepts.

def create_assistant(model: str):
    client = OpenAI(
        api_key=get_config("api_key")
    )
    assistant = client.beta.assistants.create(
        name="DevOps Assistant",
        instructions="""
        You are a helpful assistant specialized in DevOps tasks. Follow these rules:
        1. If the user asks "how to", respond only with the relevant command needed to complete the task. Do not provide additional explanations unless explicitly requested.
        2. Do not execute any functions unless the user explicitly instructs you to do so.
        3. If the user mentions a task that requires a file path but does not provide one, default to using docker-compose.yml.
        4. Restrict your responses to topics strictly within the scope of DevOps. Ignore questions unrelated to this context.
        """,
        model=model,
        tools=[
            {
                "type": "function",
                "function": {
                    "name": "remove_containers",
                    "description": "Remove docker portfolio containers",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "file_path": {
                                "type": "string",
                                "enum": ["~/portfolio/docker-compose.yml"],
                                "description": "The path to the docker-compose file."
                            }
                        }
                    }
                }
            },
            {
                "type": "function",
                "function": {
                    "name": "start_containers",
                    "description": "Start docker portfolio containers",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "file_path": {
                                "type": "string",
                                "description": "The path to the docker-compose file."
                            }
                        }
                    }
                }
            },
            {
                "type": "function",
                "function": {
                    "name": "restart_containers",
                    "description": "Restart docker portfolio containers",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "file_path": {
                                "type": "string",
                                "description": "The path to the docker-compose file."
                            }
                        }
                    }
                }
            }
        ]
    )
    return assistant.id
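The three tool entries differ only in their name, their description, and the optional enum on file_path. As a possible way to cut the repetition, the sketch below builds the same dictionaries from a small helper. make_compose_tool is a hypothetical name introduced here for illustration. The resulting list could be passed as the tools argument.

```python
from typing import Any, Dict, List, Optional


def make_compose_tool(
    name: str,
    description: str,
    allowed_paths: Optional[List[str]] = None,
) -> Dict[str, Any]:
    """Build one function-tool definition that takes a file_path string."""
    file_path: Dict[str, Any] = {
        "type": "string",
        "description": "The path to the docker-compose file.",
    }
    if allowed_paths:
        # Restrict the argument to a fixed set of values
        file_path["enum"] = allowed_paths
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": {"file_path": file_path},
            },
        },
    }


tools = [
    make_compose_tool(
        "remove_containers",
        "Remove docker portfolio containers",
        ["~/portfolio/docker-compose.yml"],
    ),
    make_compose_tool("start_containers", "Start docker portfolio containers"),
    make_compose_tool("restart_containers", "Restart docker portfolio containers"),
]
```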

Handle Tool Calls

This function processes tool calls requested by the assistant during a conversation. It executes the appropriate tool based on the assistant’s instructions.

import json

# The three container helpers are assumed to live in cli/modules/tool_calls.py
from cli.modules.tool_calls import remove_containers, restart_containers, start_containers


def handle_tool_call(thread_id: str, run_id: str, tool_calls: list):
    client = OpenAI(
        api_key=get_config("api_key")
    )
    tool_outputs = []
    for tool_call in tool_calls:
        args = json.loads(tool_call.function.arguments or "{}")
        # file_path is not a required parameter, so fall back to the default
        # named in the assistant instructions
        file_path = args.get("file_path", "docker-compose.yml")
        if tool_call.function.name == "remove_containers":
            result = remove_containers(file_path)
        elif tool_call.function.name == "start_containers":
            result = start_containers(file_path)
        elif tool_call.function.name == "restart_containers":
            result = restart_containers(file_path)
        else:
            result = f"Unknown tool: {tool_call.function.name}"
        # Submit an output for every tool call so the run can continue
        tool_outputs.append({
            "tool_call_id": tool_call.id,
            "output": str(result)
        })
    run = client.beta.threads.runs.submit_tool_outputs_and_poll(
        thread_id=thread_id,
        run_id=run_id,
        tool_outputs=tool_outputs,
    )
    if run.status == "completed":
        # Messages are listed newest first, so data[0] is the latest reply
        return client.beta.threads.messages.list(thread_id=thread_id).data[0].content[0].text.value
    elif run.status == "failed":
        return f"Error from assistant: {run.last_error}"
    raise Exception(f"Unknown run status: {run.status}")
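As the number of tools grows, the if/elif chain in handle_tool_call gets longer. One alternative is a dispatch table that maps tool names to functions. The sketch below illustrates the idea under some assumptions: the three tool functions are stand-ins that only return a string, run_tool_calls is a hypothetical helper, and SimpleNamespace objects imitate the shape of the tool calls (id, function.name, function.arguments) used in the code above.

```python
import json
from types import SimpleNamespace
from typing import Callable, Dict, List


# Stand-ins for the real tool functions, for illustration only
def remove_containers(file_path: str) -> str:
    return f"removed {file_path}"


def start_containers(file_path: str) -> str:
    return f"started {file_path}"


def restart_containers(file_path: str) -> str:
    return f"restarted {file_path}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "remove_containers": remove_containers,
    "start_containers": start_containers,
    "restart_containers": restart_containers,
}


def run_tool_calls(tool_calls, default_path: str = "docker-compose.yml") -> List[dict]:
    """Look up each requested tool by name and collect one output per call."""
    outputs = []
    for call in tool_calls:
        args = json.loads(call.function.arguments or "{}")
        handler = TOOLS.get(call.function.name)
        if handler is None:
            output = f"Unknown tool: {call.function.name}"
        else:
            output = handler(args.get("file_path", default_path))
        outputs.append({"tool_call_id": call.id, "output": str(output)})
    return outputs


# A fake tool call with the same attribute layout
fake_call = SimpleNamespace(
    id="call_1",
    function=SimpleNamespace(name="start_containers", arguments='{"file_path": "app.yml"}'),
)
print(run_tool_calls([fake_call]))
```

With this layout, adding a tool means adding one entry to TOOLS rather than another branch.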

Chat with the Assistant

This function handles user interactions with the assistant, including sending messages and processing responses.

def chat_with_assistant(assistant_id: str, thread_id: str, message: str):
    client = OpenAI(
        api_key=get_config("api_key")
    )
    # Add the user's message to the thread
    client.beta.threads.messages.create(
        thread_id=thread_id,
        role="user",
        content=message
    )
    # Start a run and wait until it reaches a terminal or action state
    run = client.beta.threads.runs.create_and_poll(
        thread_id=thread_id,
        assistant_id=assistant_id,
    )
    if run.status == "requires_action":
        # The assistant wants one or more tools to be executed
        tool_calls = run.required_action.submit_tool_outputs.tool_calls
        return handle_tool_call(thread_id, run.id, tool_calls)
    elif run.status == "completed":
        # Messages are listed newest first, so data[0] is the latest reply
        return client.beta.threads.messages.list(thread_id=thread_id).data[0].content[0].text.value
    elif run.status == "failed":
        return f"Error from assistant: {run.last_error}"
    raise Exception(f"Unknown run status: {run.status}")
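The contents of tool_calls.py are not shown in this article. As a rough idea of what the three container functions could look like, here is a minimal sketch that shells out to docker compose. Everything in it is an assumption: the helper names compose_command and run_compose are hypothetical, and the mapping of start, remove and restart to the up -d, down and restart subcommands is one plausible choice rather than the author's implementation.

```python
import os
import subprocess
from typing import List


def compose_command(file_path: str, action: List[str]) -> List[str]:
    """Build the argument list for `docker compose -f <file> <action...>`."""
    # expanduser resolves "~", which subprocess does not expand without a shell
    return ["docker", "compose", "-f", os.path.expanduser(file_path), *action]


def run_compose(file_path: str, action: List[str]) -> str:
    """Run the command and return text that can be sent back as a tool output."""
    try:
        proc = subprocess.run(
            compose_command(file_path, action),
            capture_output=True,
            text=True,
        )
    except FileNotFoundError:
        return "Error: the docker executable was not found"
    if proc.returncode != 0:
        return f"Error: {proc.stderr.strip()}"
    return proc.stdout.strip() or "Done"


def start_containers(file_path: str) -> str:
    return run_compose(file_path, ["up", "-d"])


def remove_containers(file_path: str) -> str:
    return run_compose(file_path, ["down"])


def restart_containers(file_path: str) -> str:
    return run_compose(file_path, ["restart"])
```

Each function returns a string rather than raising, so the assistant always receives some output to summarize for the user.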

Building CLI

This CLI interacts with the DevOps Assistant through three commands: init, config, and chat. Create a main.py file for it. Typer manages the command-line interface, and the database module stores configuration details such as the API key, the selected model, and the assistant and thread IDs. The assistant is powered by OpenAI and can be created with different models. Note that config only updates the stored model name; the assistant created by init keeps the model it was created with.

import typer
from typer import Option

from cli.modules.assistant import chat_with_assistant, create_assistant, create_thread
from cli.modules.database import get_config, init_db, set_config

app = typer.Typer()

# Menu number -> model name, shown during init
models = {
    "1": "gpt-3.5-turbo",
    "2": "gpt-4o",
    "3": "gpt-4o-mini"
}


@app.command(name="chat")
def chat(message: str):
    if not message:
        typer.echo("Please provide a message to ask Friday assistant")
        return
    try:
        # get_config raises if init has not been run, so keep it inside the try
        assistant_id = get_config("assistant_id")
        thread_id = get_config("thread_id")
        response = chat_with_assistant(
            thread_id=thread_id, assistant_id=assistant_id, message=message)
        typer.echo(response)
    except Exception as e:
        typer.echo(e)


@app.command(name="config")
def config(model: str = Option(..., "--model", help="The model to use for Friday assistant")):
    if model not in models.values():
        typer.echo("Invalid model")
        return
    set_config("model", model)
    typer.echo("Model set to " + model)


@app.command(name="init")
def init():
    typer.echo("Initializing...")
    init_db()
    # hide_input keeps the key from being echoed to the terminal
    api_key = typer.prompt("Enter your OpenAI API key", hide_input=True)
    set_config("api_key", api_key)
    typer.echo("Please select a model:")
    for key, value in models.items():
        typer.echo(f"{key}. {value}")
    model_number = typer.prompt("Enter the number of the model you want to use")
    if model_number not in models:
        typer.echo("Invalid model number")
        raise typer.Exit(code=1)
    model = models[model_number]
    set_config("model", model)
    thread_id = create_thread()
    set_config("thread_id", thread_id)
    assistant_id = create_assistant(model)
    set_config("assistant_id", assistant_id)
    typer.echo("Initialization complete")


if __name__ == "__main__":
    app()
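In this CLI, init asks for a menu number while config expects a model name. If you would like both commands to accept either form, a small lookup helper is one option. The sketch below is only an illustration; resolve_model is a hypothetical name, and the MODELS dictionary repeats the one from main.py so that the example runs on its own.

```python
from typing import Dict, Optional

MODELS: Dict[str, str] = {
    "1": "gpt-3.5-turbo",
    "2": "gpt-4o",
    "3": "gpt-4o-mini",
}


def resolve_model(choice: str, models: Dict[str, str] = MODELS) -> Optional[str]:
    """Return the model name for a menu number or a model name, else None."""
    choice = choice.strip()
    if choice in models:
        # The user typed a menu number such as "2"
        return models[choice]
    if choice in models.values():
        # The user typed a model name directly
        return choice
    return None


print(resolve_model("2"))
print(resolve_model("gpt-4o-mini"))
print(resolve_model("9"))
```

Returning None for an unknown choice leaves it to the caller to decide whether to prompt again or exit.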

Package CLI For Distribution

pyproject.toml is the standard configuration file for Python projects. It specifies build system requirements, package metadata, dependencies, and other project settings. PEP 518 introduced it for declaring build requirements, and PEP 621 later standardized the [project] metadata table, giving tools like setuptools, flit, and poetry a unified way to interact with a project.

[project]
name = "friday"
version = "0.1.0"
description = "Friday assistant"
readme = "README.md"
requires-python = ">=3.11"
dependencies = [
    "openai>=1.56.0",
    "peewee>=3.17.8",
    "pyperclip>=1.9.0",
    "python-dotenv>=1.0.1",
    "typer>=0.14.0",
]

[build-system]
requires = ["setuptools >= 61.0"]
build-backend = "setuptools.build_meta"

[project.scripts]
friday = "cli.main:app"

[tool.setuptools.packages.find]
where = ["."]

[project]: Defines metadata for your project.

- name: The project name, "friday".

- version: The project version, "0.1.0".

- description: A brief description, "Friday assistant".

- readme: Specifies the file that contains the README (usually for documentation).

- requires-python: Specifies the minimum Python version required (>=3.11).

- dependencies: Lists external libraries your project depends on, such as openai, peewee, and typer.

[build-system]: Specifies the build requirements for the project. You're using setuptools as the build backend.

- requires: Lists the tools needed to build the package, specifically setuptools >= 61.0.

- build-backend: Specifies that setuptools.build_meta is the backend used to build your project.

[project.scripts]: Registers a CLI command (friday) that maps to cli.main:app, so you can invoke your CLI via the friday command in the terminal.

[tool.setuptools.packages.find]: Directs setuptools to discover packages in the current directory ("."). It will search for any Python packages in the specified directory.
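To make the [project.scripts] entry more concrete, the sketch below shows roughly how a "module:attribute" string such as cli.main:app can be resolved into a callable. load_entry_point is a hypothetical helper written for illustration, not the launcher code that the packaging tools actually generate. It is demonstrated on the standard library's json:dumps so that it runs without the friday package installed.

```python
import importlib
from typing import Any


def load_entry_point(spec: str) -> Any:
    """Resolve a 'package.module:attr' string to the object it names."""
    module_name, _, attr_path = spec.partition(":")
    obj: Any = importlib.import_module(module_name)
    if attr_path:
        # Walk dotted attributes, e.g. "JSONDecoder.decode"
        for attr in attr_path.split("."):
            obj = getattr(obj, attr)
    return obj


dumps = load_entry_point("json:dumps")
print(dumps({"ok": True}))  # prints {"ok": true}
```

For friday = "cli.main:app", the installed friday command ends up importing cli.main and calling app, which is why the cli package has to be discoverable by setuptools.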

Then run uv build and check that the dist folder contains two files, with the extensions .whl and .tar.gz. To install the package, run pip install dist/file_name.whl or uv pip install dist/file_name.whl.

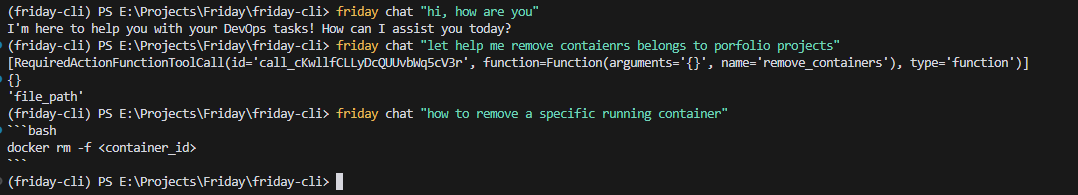

Test The CLI

After a successful installation, we can use it like any other CLI. Let's try it:

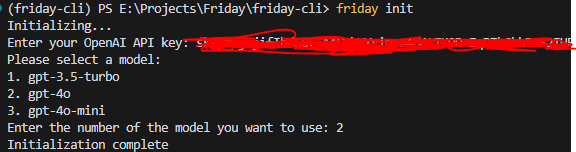

Init the Model

We will try asking a simple question, a general question, and a question for a specific context.

Conclusion

This approach streamlines communication with the assistant and makes DevOps tasks more efficient through an easy-to-use terminal interface. With Typer’s simple command structure and OpenAI’s powerful language models, you can build a custom assistant capable of automating and assisting with a variety of DevOps workflows.

This CLI app serves as a starting point for further enhancement, such as adding new tools, expanding the assistant’s capabilities, or integrating with other DevOps services.