My First Encounter with the OpenAI Assistants API

Introduction

Artificial intelligence has reached an inflection point, and OpenAI's Assistants API makes it much easier to put language models to work inside your own applications. You describe an assistant once (its instructions, model, and tools), and the API manages conversations and tool calls for you. In this blog post, I'll share my first hands-on experience with it by building a small weather assistant.

Prerequisites

- Python 3.8+ (the code uses typing.Literal, which was added in Python 3.8)

- uv package manager (pip install uv)

- OpenAI account

Setting up your environment

- Create the project: uv init weather-assistant (uv creates the project's virtual environment automatically the first time you run uv add)

- Navigate to the project: cd weather-assistant

- Install OpenAI API library: uv add openai

- Install dotenv: uv add python-dotenv

Configure OpenAI API

- Create an API key in the OpenAI platform: Settings > API keys > Create new secret key

- Add the API key to a .env file in the project root: OPENAI_API_KEY=YOUR_API_KEY_HERE

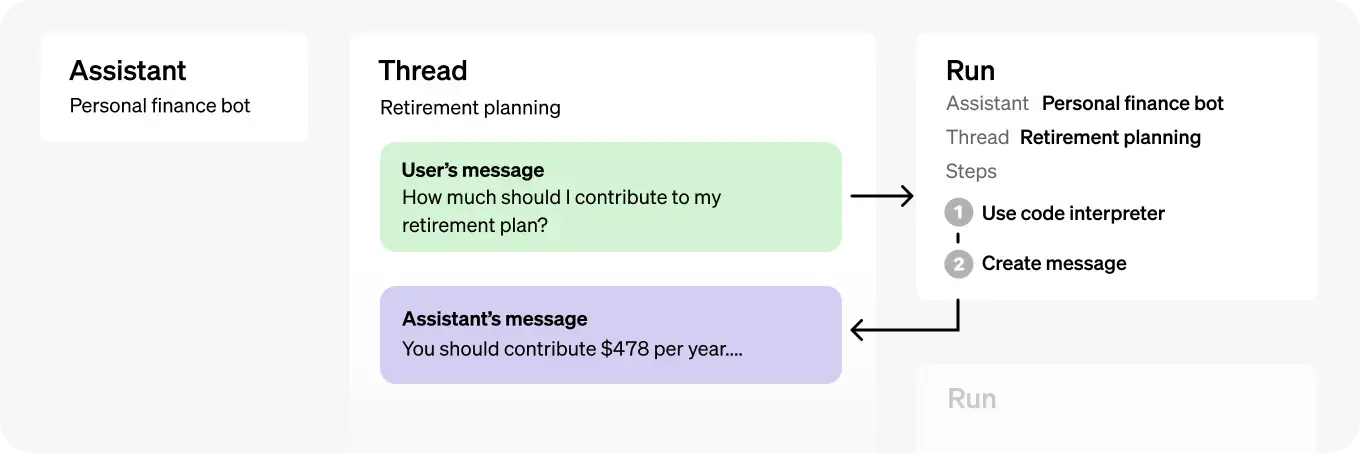
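A missing or mistyped key is an easy first-run mistake. As an optional safety net, we can check the variable right after load_dotenv() and fail with a readable message. require_api_key is a hypothetical helper of my own, not part of the openai or python-dotenv packages, and its env parameter exists only to make it easy to test.

```python
import os
from typing import Mapping, Optional

# Placeholder value used in the .env example above
PLACEHOLDER = "YOUR_API_KEY_HERE"


def require_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Hypothetical helper: return OPENAI_API_KEY or raise a readable error."""
    env = os.environ if env is None else env
    key = (env.get("OPENAI_API_KEY") or "").strip()
    if not key:
        raise RuntimeError(
            "OPENAI_API_KEY is not set; check that .env exists and load_dotenv() ran"
        )
    if key == PLACEHOLDER:
        raise RuntimeError("OPENAI_API_KEY still holds the placeholder value from .env")
    return key
```

With this in place, the client could be created as OpenAI(api_key=require_api_key()) after calling load_dotenv().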

Build Assistant

The Assistants API lets you build AI-powered assistants into your own applications. An assistant combines instructions, a model, and tools to answer user queries. It can use built-in tools such as Code Interpreter and File Search, as well as functions you define yourself (function calling).

Load the environment variables and initialize the OpenAI client:

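```python
import json
import os
from datetime import datetime
from typing import Literal  # json, datetime and Literal are used by later functions

from dotenv import load_dotenv
from openai import OpenAI

# Read OPENAI_API_KEY from the .env file into the environment
load_dotenv()

client = OpenAI(
    api_key=os.getenv("OPENAI_API_KEY")
)
```

Next, we need a function the assistant can call as a tool. To keep things simple, it returns fake data as a meaningful result: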

```python
def fetch_data(location: str, date: str) -> str:
    # Fake weather lookup: the date arrives as an ISO 8601 string
    return f"Weather data for {location} on {date} is sunny and 22°C"
```

Then we write two helpers, create_assistant and create_thread. create_assistant configures and creates an assistant with the following features:

- Custom Name: "Weather Assistant".

- Behavioural Instructions: Guides the assistant on how to handle queries.

- Model Selection: Allows the user to choose between gpt-3.5-turbo, gpt-4o, and gpt-4o-mini.

- Tool Integration: Includes a weather-fetching function (fetch_data) and a code interpreter tool.

```python
def create_assistant(model: Literal["gpt-3.5-turbo", "gpt-4o", "gpt-4o-mini"]) -> str:
    assistant = client.beta.assistants.create(
        name="Weather Assistant",
        instructions="""You are a helpful assistant capable of checking weather information.
Use the fetch_data function to get the weather data; you must convert the date to ISO format before using it.
Please do not answer any questions that are outside the context of weather.
Always prioritize using the functions I have provided before generating your own responses.""",
        model=model,
        tools=[
            {"type": "code_interpreter"},
            {
                "type": "function",
                "function": {
                    "name": "fetch_data",
                    "description": "Get weather data for a given location and date. The date should be in ISO format (YYYY-MM-DDTHH:MM:SS).",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "location": {
                                "type": "string",
                                "description": "The location to fetch data for",
                            },
                            "date": {
                                "type": "string",
                                "format": "date-time",
                                "description": "The date in ISO format (YYYY-MM-DDTHH:MM:SS)",
                            },
                        },
                        "additionalProperties": False,
                        "required": ["location", "date"],
                    },
                },
            },
        ],
    )
    return assistant.id


def create_thread() -> str:
    # A thread stores the conversation history for one session
    return client.beta.threads.create().id
```
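The JSON schema in create_assistant guides the model, but as configured here it does not guarantee that the arguments the model produces actually conform. A minimal stdlib sketch of a defensive check that mirrors that schema follows. validate_fetch_data_args is a hypothetical helper, not part of the SDK, and it also accepts "now", because handle_tool_call resolves that keyword itself.

```python
import json
from datetime import datetime
from typing import Any, Dict

# Mirrors "required" (and, with additionalProperties False, the allowed keys) in the schema
REQUIRED = {"location", "date"}


def validate_fetch_data_args(raw_arguments: str) -> Dict[str, Any]:
    """Hypothetical helper: parse tool-call arguments and check them against the schema."""
    args = json.loads(raw_arguments)  # JSONDecodeError is a subclass of ValueError
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    missing = REQUIRED - set(args)
    if missing:
        raise ValueError(f"missing required arguments: {sorted(missing)}")
    extra = set(args) - REQUIRED  # "additionalProperties": False
    if extra:
        raise ValueError(f"unexpected arguments: {sorted(extra)}")
    if not isinstance(args["location"], str) or not args["location"].strip():
        raise ValueError("location must be a non-empty string")
    if not isinstance(args["date"], str):
        raise ValueError("date must be a string")
    if args["date"].strip().lower() != "now":
        datetime.fromisoformat(args["date"])  # raises ValueError if not ISO 8601
    return args
```

Rejecting bad input early gives a clear error instead of passing a malformed date into fetch_data.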

With the assistant in place, we need handle_tool_call, which processes the tool calls requested during a run. It checks which tools the assistant asked for, executes the corresponding Python functions, and submits their outputs back to the run.

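```python
def handle_tool_call(thread_id: str, run_id: str, tool_calls: list) -> str:
    tool_outputs = []
    for tool_call in tool_calls:
        if tool_call.function.name == "fetch_data":
            args = json.loads(tool_call.function.arguments)
            date = args.get("date")
            # Resolve "now" to a concrete ISO timestamp
            if date and date.lower() == "now":
                date = datetime.now().isoformat()
            result = fetch_data(args["location"], date)
            print(result)
            tool_outputs.append({
                "tool_call_id": tool_call.id,
                "output": result,
            })

    # Send the outputs back and wait until the run settles again
    run = client.beta.threads.runs.submit_tool_outputs_and_poll(
        thread_id=thread_id,
        run_id=run_id,
        tool_outputs=tool_outputs,
    )

    if run.status == "requires_action":
        # The assistant asked for another round of tool calls
        return handle_tool_call(
            thread_id, run.id, run.required_action.submit_tool_outputs.tool_calls
        )
    if run.status != "completed":
        return f"Run ended with status {run.status}: {run.last_error}"
    # messages.list returns the newest message first, i.e. the assistant's reply
    return client.beta.threads.messages.list(thread_id=thread_id).data[0].content[0].text.value
```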
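The if-chain in handle_tool_call works well for a single tool. If the assistant grows more functions, one alternative is a name-to-function registry. This is my own design choice, not something the API requires. The sketch uses SimpleNamespace objects as stand-ins for the SDK's tool call objects and relies only on the .id, .function.name and .function.arguments attributes that handle_tool_call already reads. TOOL_REGISTRY and build_tool_outputs are hypothetical names. Unknown tools and bad arguments produce an error string as the output, so no requested call is silently dropped.

```python
import json
from types import SimpleNamespace
from typing import Any, Callable, Dict, List


def fetch_data(location: str, date: str) -> str:
    # Same fake implementation as in the post
    return f"Weather data for {location} on {date} is sunny and 22°C"


# Hypothetical registry: tool name -> Python function that implements it
TOOL_REGISTRY: Dict[str, Callable[..., str]] = {"fetch_data": fetch_data}


def build_tool_outputs(tool_calls: List[Any]) -> List[Dict[str, str]]:
    """Run each requested function; return outputs shaped like tool_outputs in the post."""
    outputs = []
    for call in tool_calls:
        func = TOOL_REGISTRY.get(call.function.name)
        if func is None:
            result = f"Error: unknown tool {call.function.name!r}"
        else:
            try:
                result = str(func(**json.loads(call.function.arguments)))
            except (ValueError, TypeError) as exc:
                # Invalid JSON or wrong argument names: report back instead of crashing
                result = f"Error: {exc}"
        outputs.append({"tool_call_id": call.id, "output": result})
    return outputs


if __name__ == "__main__":
    # SimpleNamespace stands in for the SDK's tool call object
    call = SimpleNamespace(
        id="call_1",
        function=SimpleNamespace(
            name="fetch_data",
            arguments='{"location": "Ho Chi Minh City", "date": "2024-05-01T09:00:00"}',
        ),
    )
    print(build_tool_outputs([call]))
```

Adding a tool then means writing the function and registering it, instead of extending the if-chain.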

Finally, we need a function that ties everything together and talks to the assistant. chat_with_assistant:

- Sends a user query to the assistant.

- Manages the assistant's response lifecycle, including tool invocations and error handling.

- Passes tool calls, such as fetch_data for weather queries, to handle_tool_call whenever the assistant requests them.

```python
def chat_with_assistant(assistant_id: str, message: str, thread_id: str) -> str:
    # Add the user's message to the thread
    client.beta.threads.messages.create(
        thread_id=thread_id,
        role="user",
        content=message,
    )

    # Start a run and poll until it completes, fails, or needs a tool call
    run = client.beta.threads.runs.create_and_poll(
        thread_id=thread_id,
        assistant_id=assistant_id,
        additional_instructions="convert the date into ISO format",
    )

    if run.status == "requires_action":
        tool_calls = run.required_action.submit_tool_outputs.tool_calls
        return handle_tool_call(thread_id, run.id, tool_calls)
    elif run.status == "completed":
        return client.beta.threads.messages.list(thread_id=thread_id).data[0].content[0].text.value
    elif run.status == "failed":
        return f"Error from assistant: {run.last_error}"

    # Remaining final states, e.g. cancelled, expired, incomplete
    raise RuntimeError(f"Unexpected run status: {run.status}")
```
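create_and_poll and submit_tool_outputs_and_poll hide a polling loop. They keep re-reading the run until it leaves the queued and in-progress states, and only then does our code inspect run.status. A rough stdlib sketch of that idea follows. It is not the SDK's actual implementation. poll_until_settled is hypothetical, and retrieve_status is an assumed zero-argument callable that, in real code, might wrap something like client.beta.threads.runs.retrieve(run_id, thread_id=thread_id).status.

```python
import time
from typing import Callable

# Run statuses that mean "still working": keep polling
IN_PROGRESS = {"queued", "in_progress", "cancelling"}


def poll_until_settled(
    retrieve_status: Callable[[], str], interval: float = 0.5, timeout: float = 60.0
) -> str:
    """Hypothetical sketch: call retrieve_status() until the run leaves IN_PROGRESS."""
    deadline = time.monotonic() + timeout
    while True:
        status = retrieve_status()
        if status not in IN_PROGRESS:
            # e.g. "requires_action", "completed", "failed", "cancelled", "expired"
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"run still {status!r} after {timeout} seconds")
        time.sleep(interval)
```

The timeout keeps a stuck run from blocking the script forever.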

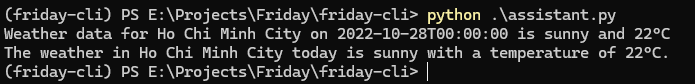

Now let's test it:

```python
if __name__ == "__main__":
    assistant_id = create_assistant("gpt-3.5-turbo")
    thread_id = create_thread()
    print(chat_with_assistant(
        assistant_id, "how's the weather in Ho Chi Minh City today?", thread_id))
```

Here is the result: the model understands the context, calls our fetch_data function, and responds naturally.
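The test question says "today", and we rely on the instructions to make the model convert that to an ISO date. If the model passes a keyword instead, the "now" special case in handle_tool_call could be generalized. The following is a small sketch of that. normalize_date and its keyword table are hypothetical and not part of the original code. The optional now parameter makes the function deterministic for testing.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical relative keywords the model might send instead of an ISO date
RELATIVE_DAYS = {"now": 0, "today": 0, "tomorrow": 1, "yesterday": -1}


def normalize_date(value: str, now: Optional[datetime] = None) -> str:
    """Turn a relative keyword or an ISO 8601 string into an ISO 8601 timestamp."""
    now = now or datetime.now()
    key = value.strip().lower()
    if key in RELATIVE_DAYS:
        return (now + timedelta(days=RELATIVE_DAYS[key])).isoformat()
    # Raises ValueError if the model sent something we cannot parse
    return datetime.fromisoformat(value.strip()).isoformat()
```

Inside handle_tool_call, date = normalize_date(args["date"]) would then replace the "now" check.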

Conclusion

Through this example, you now have an overview of how to integrate with the OpenAI Assistants API and create your own customized assistant. From configuring tools like fetch_data to managing conversations and tool calls, this workflow shows how flexible domain-specific assistants can be. Whether it’s weather updates, data analysis, or other specialized tasks, the Assistants API gives you the building blocks to craft interactive solutions tailored to your needs.

Now it’s your turn to experiment and unleash the full potential of AI assistants in your projects!